Adversarial Filters

An ongoing design experiment exploring how computer vision systems can be confused, distorted, or interrupted through aesthetics. Drawing from adversarial machine-learning attacks, anti-surveillance fashion, and AR prototyping, this project asks a simple question: What does resistance look like when machines are the ones doing the looking?

Created under the advisement of Prof. Franziska Schreiber – Universität der Künste Berlin

The visual language of the project builds on:

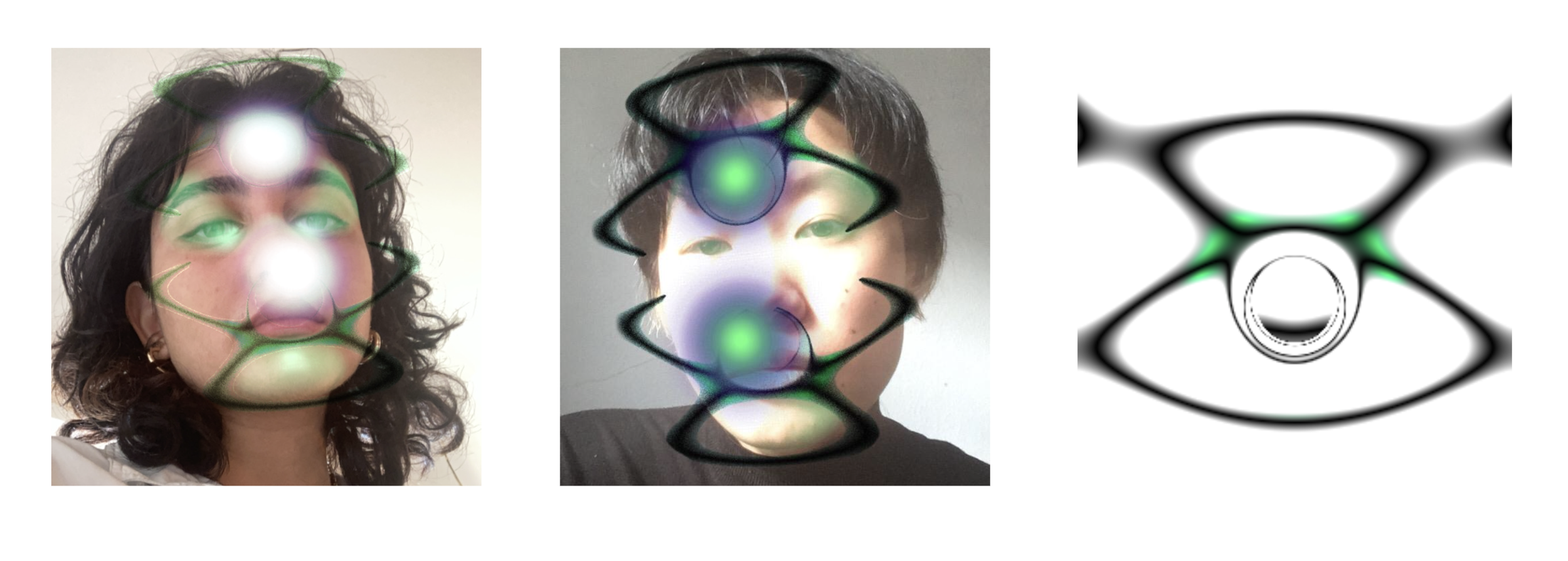

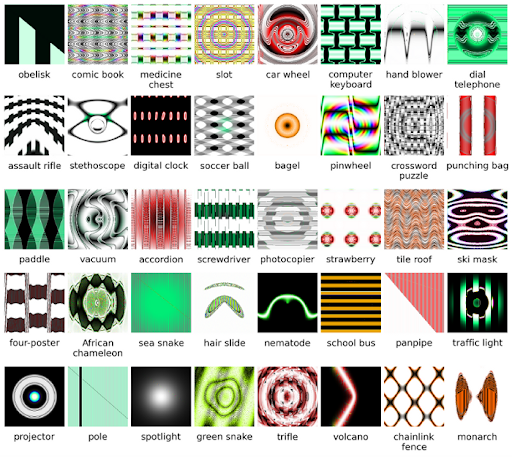

Adversarial patterns that exploit model weaknesses (Nguyen, Yosinski, Clune, CVPR ’15);

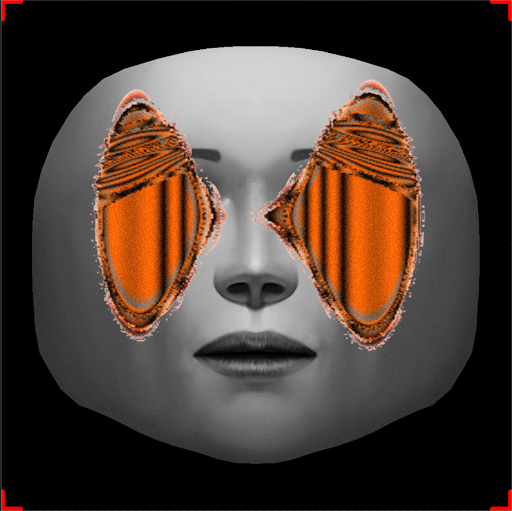

CV Dazzle-style face-obscuring designs (J. Hong, A. Harvey);

Computer vision research demonstrating how textures, occlusions, and small perturbations mislead recognition systems (Bhagavatula et al., CMU).
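To make the two attack ideas above concrete, here is a minimal sketch in Python. It assumes a made-up four-pixel logistic-regression "face detector"; `WEIGHTS`, `BIAS`, and every function name are hypothetical and come from no real model or library. `fgsm_evade` applies one step of the fast gradient sign method (FGSM), a standard small-perturbation attack from the adversarial-ML literature that is not one of the works cited here. `evolve_fooling_pattern` is a heavily simplified hill climber in the spirit of the evolutionary search Nguyen et al. used to generate fooling images; it is not a reproduction of their method.

```python
import math
import random

# Hypothetical toy "face detector": logistic regression over a flattened
# 4-pixel grayscale patch with values in [0, 1]. The weights are invented
# for illustration and do not come from any real model.
WEIGHTS = [2.0, -1.5, 1.0, 3.0]
BIAS = -1.0


def face_probability(pixels):
    """Return the toy model's probability that the patch contains a face."""
    z = sum(w * x for w, x in zip(WEIGHTS, pixels)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def clip(x):
    """Keep a pixel value inside the valid [0, 1] range."""
    return min(1.0, max(0.0, x))


def fgsm_evade(pixels, epsilon):
    """One fast-gradient-sign step that lowers the face score.

    For this logistic model, d(prob)/d(pixel_i) = p * (1 - p) * w_i, so the
    gradient's sign is sign(w_i). Every pixel moves by at most epsilon
    against that sign, then is clipped back into [0, 1].
    """
    return [
        clip(x - epsilon * math.copysign(1.0, w))
        for w, x in zip(WEIGHTS, pixels)
    ]


def evolve_fooling_pattern(steps=500, sigma=0.1, seed=0):
    """(1+1) hill climber: start from random noise and keep any Gaussian
    mutation that raises the face score. A much-simplified stand-in for
    the evolutionary search used to produce "fooling images"."""
    rng = random.Random(seed)
    best = [rng.random() for _ in WEIGHTS]
    best_score = face_probability(best)
    for _ in range(steps):
        candidate = [clip(x + rng.gauss(0.0, sigma)) for x in best]
        score = face_probability(candidate)
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score


if __name__ == "__main__":
    patch = [0.6, 0.2, 0.5, 0.4]
    print(round(face_probability(patch), 2))                    # 0.83
    print(round(face_probability(fgsm_evade(patch, 0.25)), 2))  # 0.43
    pattern, score = evolve_fooling_pattern()
    print([round(x, 2) for x in pattern], round(score, 3))
```

In this toy case each pixel shifts by 0.25, which is large relative to a four-pixel input; in a real image the same kind of bounded change is spread over many thousands of pixels, so each individual change can be much smaller while the summed effect on the model's score is still enough to flip its decision.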

Instead of hiding the face, Adversarial Filters uses playful, almost fashion-editorial graphics (bagels, nematodes, monarch butterflies, stethoscopes, over-saturated geometric fragments) to embed algorithmic confusion in everyday aesthetics.

Nguyen A, Yosinski J, Clune J. Deep Neural Networks are Easily Fooled: High Confidence Predictions for Unrecognizable Images. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR ’15), 2015.

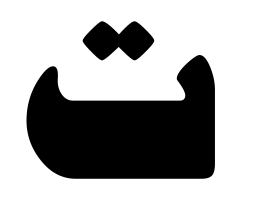

As part of the process, I translated dense academic research on adversarial machine-learning attacks into an accessible, adaptable digital format. Using Spark AR, Meta’s tool for building Instagram filters, I reinterpreted adversarial patterns from computer-vision papers as visual assets that could live in everyday social media environments. Each pattern was altered, stylized, and composited to preserve its adversarial logic while making it wearable, expressive, and easy for non-experts to test. When overlaid onto the face, these modified patterns are designed to break or confuse face-detection pipelines.
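The compositing step can be pictured as per-pixel alpha blending of a pattern over the face region. The sketch below is a minimal Python illustration, assuming grayscale images stored as nested lists with values in [0, 1]; `overlay_pattern` and its parameters are hypothetical and are not part of Spark AR or any other tool's API. The `opacity` factor illustrates the trade-off between wearability and adversarial strength: fading a pattern makes it subtler on the face, but it also shrinks the change the detector actually sees.

```python
def overlay_pattern(face, pattern, alpha_mask, top, left, opacity=1.0):
    """Alpha-blend `pattern` onto a grayscale `face` image.

    Images are lists of rows with values in [0, 1]. The pattern's top-left
    corner is placed at (top, left); pattern pixels that fall outside the
    face image are skipped. Per pixel, with a = alpha * opacity:
        out = a * pattern + (1 - a) * face
    Returns a new image and leaves `face` unchanged.
    """
    out = [row[:] for row in face]
    for i, (p_row, a_row) in enumerate(zip(pattern, alpha_mask)):
        y = top + i
        if not 0 <= y < len(out):
            continue
        for j, (p, a) in enumerate(zip(p_row, a_row)):
            x = left + j
            if 0 <= x < len(out[y]):
                a_eff = a * opacity
                out[y][x] = a_eff * p + (1.0 - a_eff) * out[y][x]
    return out


if __name__ == "__main__":
    face = [[0.5] * 4 for _ in range(4)]   # flat mid-gray stand-in "face"
    checker = [[1.0, 0.0], [0.0, 1.0]]     # hypothetical 2x2 pattern
    mask = [[0.5, 0.5], [0.5, 0.5]]        # half-transparent everywhere
    for row in overlay_pattern(face, checker, mask, top=1, left=1):
        print(row)
```

A fuller test loop would run an off-the-shelf face detector on the frame before and after compositing and compare the detections; that detector is outside the scope of this sketch.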

Adversarial Filters is really just about giving people a fun way to feel more in control of how they show up online. By turning adversarial research into playful AR looks, the project lets anyone try out what it feels like to confuse the algorithm a little (and maybe protect their digital image in the process). It’s a light, creative reminder that we’re not totally powerless in the systems that watch us.